OpenAI’s Coding Gambit: Are We Trading Trust for ‘Enhanced’ AI Development?

Introduction: OpenAI has unveiled GPT-5.2-Codex, heralded as its most advanced coding model yet, boasting ambitious claims of long-horizon reasoning, large-scale code transformations, and enhanced cybersecurity. While such pronouncements invariably spark industry buzz, it’s high time we peel back the layers of hype and critically assess the tangible implications and potential pitfalls of entrusting our critical infrastructure to these increasingly opaque black boxes.

Key Points

- The claims of “long-horizon reasoning” and “large-scale transformations” represent a significant leap from current LLM capabilities, demanding rigorous independent validation beyond marketing prose.

- If proven true, GPT-5.2-Codex could fundamentally reshape software development lifecycles, leading to unprecedented productivity gains but also a critical shift in developer roles.

- The “enhanced cybersecurity capabilities” claim presents a unique and potentially dangerous liability, as AI-generated code, if not perfectly secure, could introduce systemic vulnerabilities at scale.

In-Depth Analysis

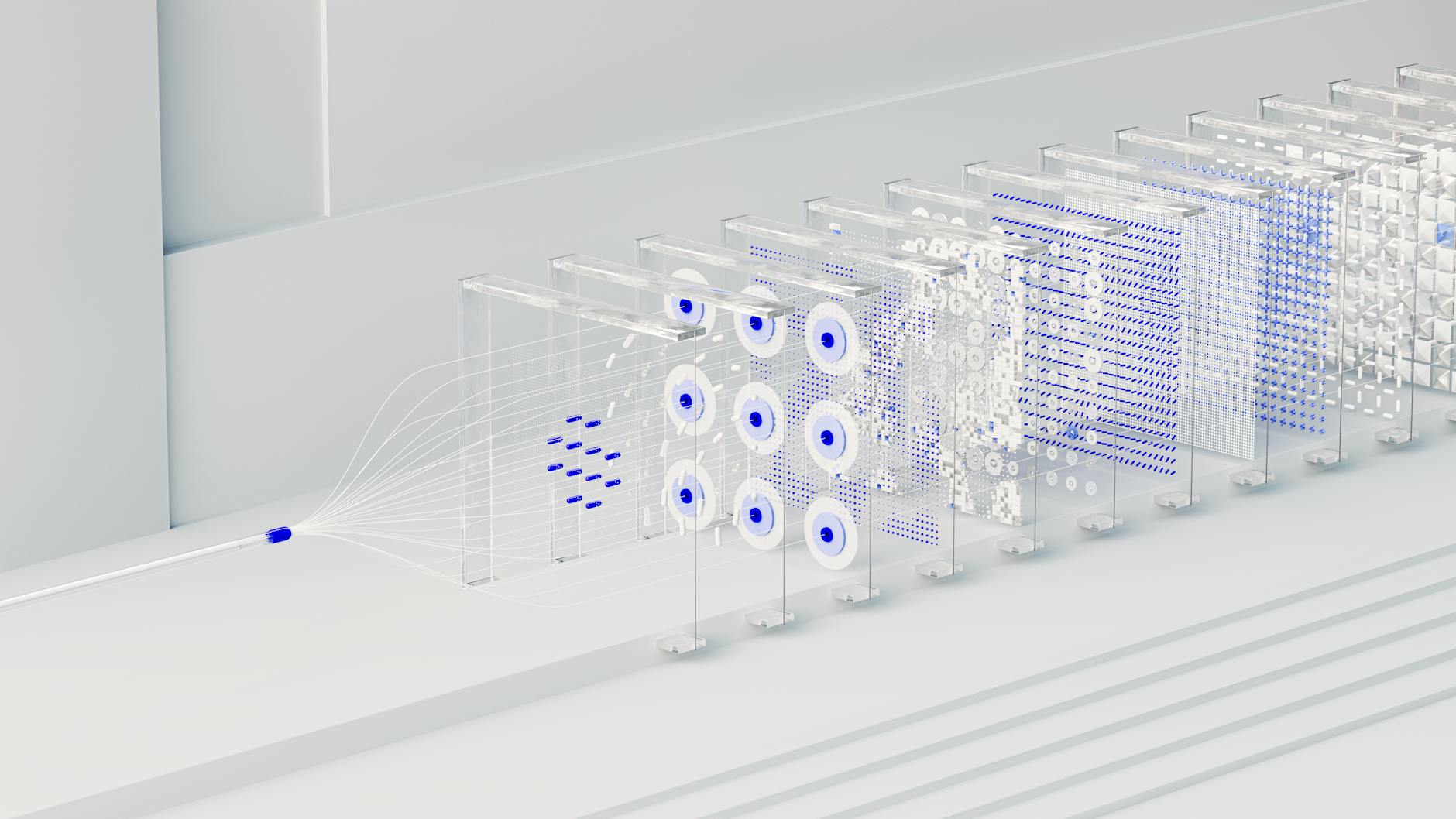

The announcement positions GPT-5.2-Codex as not merely an incremental upgrade but a paradigm shift in how AI interacts with code. Let’s dissect these claims. “Long-horizon reasoning” suggests the model can comprehend complex software architectures, track multi-file dependencies, and maintain coherence across large codebases. That has been a significant challenge for previous generative models, which are often limited by their context windows and tend to lose the thread over extended tasks. If the claim holds, it implies an ability to architect, not just autocomplete, potentially moving from code generation to true system design assistance.

Similarly, “large-scale code transformations” is a bold assertion. Current tools can refactor snippets or perform automated migrations, but only with significant human oversight. GPT-5.2-Codex, by implication, could tackle wholesale language migrations, optimize performance across an entire application stack, or even adapt code to new paradigms with minimal intervention. How it would achieve this remains largely speculative without concrete technical details: improved internal representations, a deeper grasp of computational semantics, or simply a larger, more finely tuned parameter space are all possibilities. If it succeeds, the impact on development velocity and technical debt reduction could be immense, allowing smaller teams to manage larger, more complex systems.

However, the most provocative claim is “enhanced cybersecurity capabilities.” This could mean several things: writing inherently more secure code, actively identifying and remediating vulnerabilities, or even preventing common attack vectors during generation. The potential benefits are clear, given the perpetual struggle against software exploits. But this also introduces a profound layer of risk. How do we audit AI-generated code for hidden backdoors, subtle logic flaws, or even new classes of vulnerabilities that current static analysis tools might miss? The black-box nature of these models makes trust paramount, yet verification incredibly difficult. Current AI tools for security are predominantly focused on detection, not infallible generation. Relying on an AI to guarantee security, especially in critical infrastructure, could lead to a false sense of security, inadvertently baking in vulnerabilities at the foundational level. The true impact won’t be in flashy demos, but in the long-term reliability and provable security posture of systems built with or transformed by Codex.
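To make the auditing problem concrete, here is a minimal sketch of the kind of static check a team might run over generated code, assuming Python output and using only the standard library's `ast` module. The deny-list `RISKY_CALLS`, the function `flag_risky_calls`, and the `generated` sample are all hypothetical names invented for illustration. They are not part of any OpenAI tooling, and a real audit would need far more than this.

```python
import ast

# Hypothetical deny-list for illustration only; real audits cover much more.
RISKY_CALLS = {"eval", "exec", "compile", "__import__"}


def flag_risky_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, name) for each direct call to a deny-listed builtin."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


# Invented sample standing in for model output: one obvious risk, one disguised.
generated = '''
def run(user_input):
    return eval(user_input)

def run_hidden(user_input):
    return getattr(__builtins__, "ev" + "al")(user_input)
'''

print(flag_risky_calls(generated))  # → [(3, 'eval')]
```

The check reports the direct `eval` call but says nothing about `run_hidden`, which reaches the same builtin through `getattr` and string concatenation. That gap is the point: a simple pattern-based scan catches the obvious case and misses the disguised one, which is why generated code cannot be treated as audited just because a scanner came back clean.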

Contrasting Viewpoint

While OpenAI paints a rosy picture of enhanced capabilities, a skeptical eye sees potential for significant pitfalls and unanswered questions. Competitors like Google’s Gemini or Anthropic’s Claude are making strides in code generation, yet none claim this level of holistic, infallible security or architectural prowess. The primary counterargument revolves around the inherent limitations of current large language models: their propensity for “hallucinations,” their lack of true understanding (as opposed to pattern matching), and their inability to guarantee correctness or security in critical contexts. Who bears the liability when AI-generated code introduces a catastrophic bug or a subtle security flaw? The cost of rigorously verifying, debugging, and potentially rewriting AI-generated code, especially in regulated industries, might easily negate any initial productivity gains. Moreover, training data bias could inadvertently perpetuate or even introduce new vulnerabilities if the model learns from imperfect existing codebases. Scaling these capabilities to enterprise levels, managing privacy concerns for proprietary code, and the sheer computational cost of running such advanced models also present formidable practical hurdles often downplayed in initial announcements.
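The point about verification costs can be put as back-of-the-envelope arithmetic. The sketch below is an assumption-laden toy model, not a measurement: the function `net_hours_saved` and every number passed to it are hypothetical, chosen only to show how review time can erase a generation speedup.

```python
def net_hours_saved(
    hours_by_hand: float, generation_speedup: float, review_hours: float
) -> float:
    """Hours saved per task once verification is counted (negative = net loss)."""
    hours_with_ai = hours_by_hand / generation_speedup + review_hours
    return hours_by_hand - hours_with_ai


# Hypothetical 10-hour task that the model drafts 4x faster.
print(net_hours_saved(10, 4, 2))  # light review → 5.5
print(net_hours_saved(10, 4, 8))  # heavy, regulated-industry review → -0.5
```

Under these invented figures, the same fourfold speedup yields a clear gain with two hours of review and a small loss with eight. The break-even point depends entirely on how much scrutiny the output needs, which is exactly what vendors' headline productivity numbers tend to leave out.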

Future Outlook

Realistically, over the next one to two years, GPT-5.2-Codex is unlikely to entirely replace human developers, even if its claims hold water. Instead, we’ll see it augment highly skilled engineers, taking over more of the boilerplate, repetitive work, and initial scaffolding. The “AI whisperer” will become a more defined role, focused on prompting, guiding, and, crucially, auditing the AI’s output. The biggest hurdles will be trust, transparency, and explainability. Enterprises will demand verifiable metrics for the quality, security, and performance of the code the model generates. Legal frameworks for AI-generated intellectual property and liability will need to evolve rapidly. Furthermore, the cost of training and running such massive models, coupled with the need for specialized compute infrastructure, could initially limit adoption to the largest, most well-resourced organizations. The journey from “most advanced” to “most trusted” is long and arduous.

For a deeper dive into the challenges of AI in enterprise development, see our analysis on [[The AI Developer Paradox: Skill Shift or Job Erasure?]].

Further Reading

Original Source: Introducing GPT-5.2-Codex (OpenAI Blog)