The AI “Cost Isn’t a Constraint” Myth: A Reckoning in Capacity and Capital

Introduction: In the breathless rush to deploy AI, a seductive narrative has taken hold: the smart money doesn’t sweat the compute bill. Yet beneath the surface of “shipping fast,” a more complex and, frankly, familiar infrastructure reality is asserting itself. The early euphoria over limitless cloud capacity and negligible costs is giving way to the grinding realities of budgeting, hardware scarcity, and multi-year strategic investment.

Key Points

- The claim that “cost is no longer the real constraint” for AI adoption is largely a rhetorical reframing; the source article’s own examples quickly pivot to capacity limits, rising unit costs, and the economic benefits of hybrid infrastructure.

- Hybrid cloud/on-premise strategies are emerging as the pragmatic and cost-effective default for enterprises committed to large-scale AI training and sustained operations, challenging the pure cloud-native paradigm.

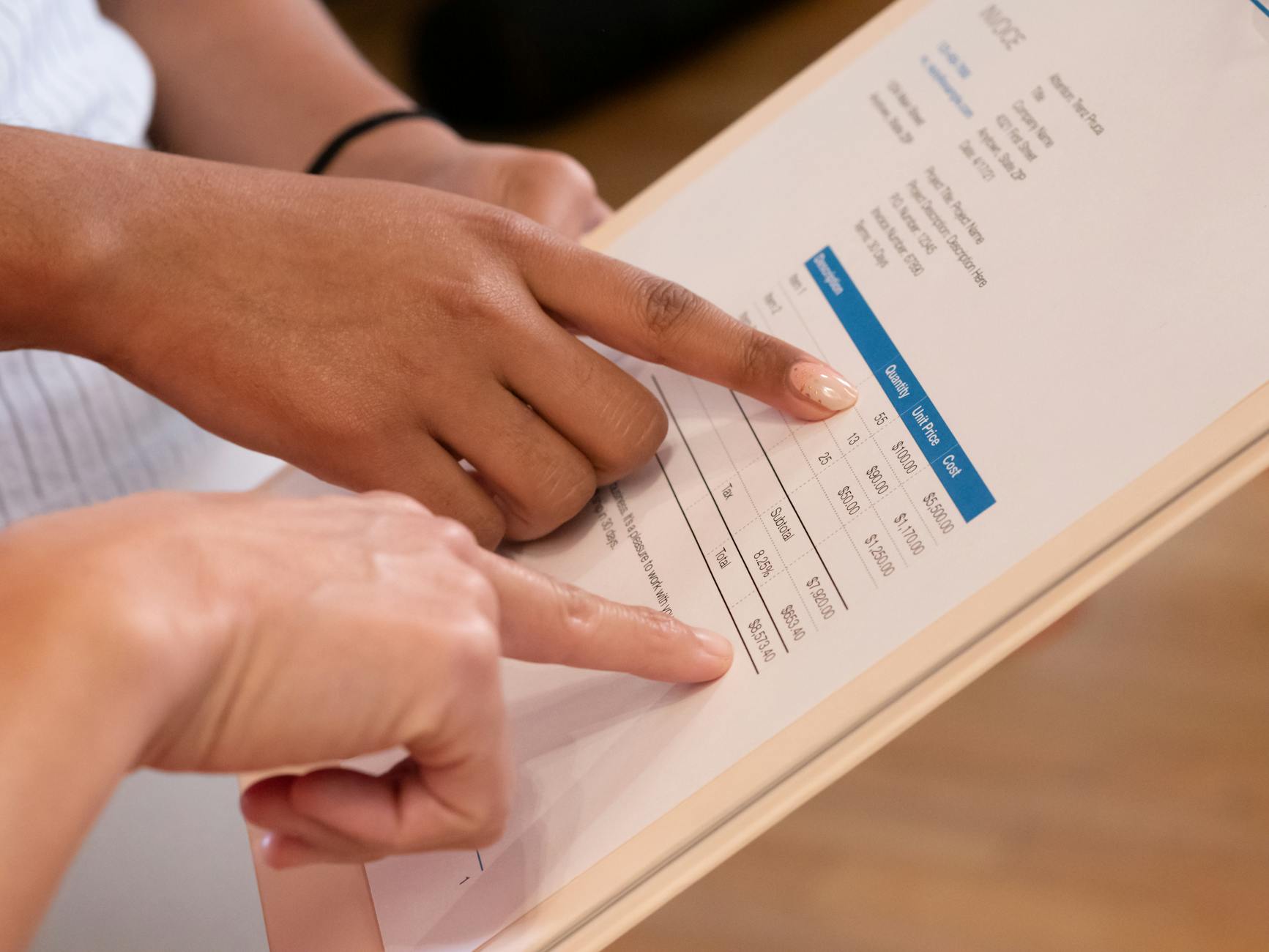

- A critical challenge facing AI-first companies is the immaturity of financial governance for dynamic, token-based AI consumption, leading to significant budgeting uncertainty and the potential for uncontrolled expenditure.

In-Depth Analysis

The prevailing sentiment that “top AI engineers don’t care about cost” is a dangerous oversimplification, if not an outright fallacy, and it reflects a common pattern in nascent technology cycles. What the article presents as indifference to cost is, on closer inspection, a tactical prioritization of speed and capability, and one that quickly runs into the real, if often deferred, financial and logistical challenges of scale. Wonder’s early assumption of “unlimited capacity,” which proved “incorrect” within a few years, is not a testament to cost irrelevance; it is a stark reminder that physical infrastructure limits apply whether the hardware is owned or rented. Likewise, the rise from a “few cents per order” to “5 to 8 cents” signals accelerating expenditure, not a negligible sum, especially for a high-volume business where every added cent is multiplied across every order.
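
To make the per-order arithmetic concrete, here is a minimal Python sketch that multiplies a per-order AI cost by order volume. The 5- and 8-cent figures come from the article; the 2-cent baseline standing in for a “few cents,” the volume of 100,000 orders per day, and the function name `annual_ai_spend` are hypothetical, chosen only for illustration.

```python
# Hypothetical sketch: yearly AI spend for a business paying a fixed AI cost per order.
# The 5 and 8 cent figures come from the article; the 2-cent baseline ("a few cents")
# and the order volume are illustrative assumptions, not reported numbers.

HYPOTHETICAL_ORDERS_PER_DAY = 100_000


def annual_ai_spend(cents_per_order: int, orders_per_day: int, days: int = 365) -> float:
    """Return yearly AI spend in US dollars (multiply in integer cents, convert once)."""
    return cents_per_order * orders_per_day * days / 100


if __name__ == "__main__":
    for cents in (2, 5, 8):
        spend = annual_ai_spend(cents, HYPOTHETICAL_ORDERS_PER_DAY)
        print(f"{cents} cents/order -> ${spend:,.0f} per year")
```

Under these assumptions, moving from 2 to 8 cents per order takes annual spend from about $730,000 to about $2.9 million: a fourfold increase driven entirely by unit cost, with order volume unchanged.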

Recursion’s journey is even more telling: a “vindication moment” for its early on-premise bet. Its current strategy (owned clusters for large, complex training runs, cloud for flexible inference) is a textbook example of mature IT architecture. Recursion’s estimate that on-premise compute is “conservatively 10 times cheaper” for large workloads, and roughly half the cost over a five-year total cost of ownership (TCO), is not evidence of ignoring cost; it is cost actively optimized through strategic capital expenditure. None of this is groundbreaking; it is the cyclical nature of compute economics replaying itself with specialized AI hardware. The “cloud-native for everything” dogma, excellent for agility in many scenarios, buckles under the sustained, high-intensity demands of foundation model training. For these workloads, “pay-as-you-go” quickly becomes “pay-through-the-nose-as-you-grow,” forcing a re-evaluation of TCO that includes hardware depreciation, power, cooling, and specialized personnel.

The “art versus science” of budgeting for token-based systems, with 50-80% of costs tied to re-sending context, points to a profound lack of financial maturity and control that could easily spiral into unsustainable operating expenses.
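
The context re-sending problem follows from how stateless chat APIs are typically billed: each request carries the system prompt and the full conversation history as input tokens, so earlier turns are billed again on every new turn. The Python sketch below counts new versus re-sent input tokens for a multi-turn conversation. The token counts, the per-million-token prices, and the names `TokenTally`, `tally_conversation`, and `resent_share` are hypothetical, not drawn from the article or any provider’s price list.

```python
from dataclasses import dataclass


@dataclass
class TokenTally:
    new_input: int = 0     # input tokens sent for the first time
    resent_input: int = 0  # input tokens already sent in an earlier request
    output: int = 0        # tokens generated by the model


def tally_conversation(turns: int, context_tokens: int,
                       user_tokens: int, reply_tokens: int) -> TokenTally:
    """Count tokens for a stateless chat API in which every request re-sends the
    fixed context (e.g. system prompt, retrieved documents) plus all prior turns."""
    tally = TokenTally()
    history = 0  # user + reply tokens accumulated from earlier turns
    for turn in range(turns):
        if turn == 0:
            # First request: everything is new.
            tally.new_input += context_tokens + user_tokens
        else:
            # Later requests: context and history are re-billed; only the user message is new.
            tally.resent_input += context_tokens + history
            tally.new_input += user_tokens
        tally.output += reply_tokens
        history += user_tokens + reply_tokens
    return tally


def resent_share(t: TokenTally, usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Fraction of total spend attributable to re-sent context (prices per million tokens)."""
    resent = t.resent_input * usd_per_m_input
    total = (t.new_input + t.resent_input) * usd_per_m_input + t.output * usd_per_m_output
    return resent / total


if __name__ == "__main__":
    # Hypothetical workload and prices, chosen only for illustration.
    t = tally_conversation(turns=10, context_tokens=2_000, user_tokens=100, reply_tokens=300)
    print(t)
    print(f"re-sent context share of spend: {resent_share(t, 3.0, 15.0):.0%}")
```

With these made-up inputs, re-sent context accounts for about two-thirds of spend, and the share grows with every additional turn because the history is re-billed each time. The sketch ignores prompt caching, which some providers offer to discount repeated prompt prefixes and which can change the picture substantially.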

Contrasting Viewpoint

While the article frames “cost isn’t a barrier” as a sign of progress, a more cynical reading is that it is a symptom of early-stage exuberance, or perhaps a deliberate sidestepping of accountability. When engineers are told to “ship fast” and optimize later, the result is often technical debt that future teams or the wider business will eventually have to pay down. The capacity crunches and rapid cost escalation reported at Wonder are precisely the consequences of not factoring cost and capacity into initial designs. Moreover, the notion that “top AI engineers don’t care about cost” risks fostering a culture in which efficiency and resource stewardship are deprioritized, leading to inefficient model deployment, redundant compute cycles, and ultimately a less sustainable economic model for AI at scale. The examples show that cost remains a factor; it is simply being managed strategically or, in some cases, poorly budgeted for.

Future Outlook

Over the next one to two years, pragmatic adoption of hybrid infrastructure for AI is likely to accelerate. Companies serious about large-scale AI development will increasingly invest in owned or co-located specialized compute for foundation model training and large-scale data processing, reserving the public cloud for burstable workloads, regional inference, and less compute-intensive tasks. The GPU market will remain intensely competitive and supply-constrained, so enterprises will need robust strategies for procuring these critical assets and managing their lifecycle. The biggest hurdles will be building more sophisticated MLOps and FinOps capabilities to manage these complex, multi-environment AI pipelines. Accurate, predictable budgeting for dynamic AI workloads, especially under evolving token-based pricing, will be paramount, demanding new tools and organizational disciplines to avoid budgetary shocks and ensure the long-term economic viability of AI initiatives.
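
The split between owned hardware for sustained training and cloud for bursty work largely comes down to utilization. As a minimal sketch, assuming straight-line amortization and a flat hourly cloud rate, the Python below finds the utilization at which owning a GPU becomes cheaper than renting one. Every price here, and the function names `annual_owned_cost`, `annual_cloud_cost`, and `break_even_utilization`, are hypothetical placeholders, not vendor quotes or figures from the article.

```python
# Hypothetical sketch: at what utilization does owning a GPU beat renting one?
# All prices are illustrative assumptions, not quotes from any vendor or cloud provider.

HOURS_PER_YEAR = 24 * 365


def annual_owned_cost(capex_usd: float, years: float, opex_per_year_usd: float) -> float:
    """Straight-line amortized hardware cost plus yearly power, cooling, and staff."""
    return capex_usd / years + opex_per_year_usd


def annual_cloud_cost(usd_per_gpu_hour: float, utilization: float) -> float:
    """Pay-as-you-go: billed only for the fraction of hours actually used."""
    return usd_per_gpu_hour * HOURS_PER_YEAR * utilization


def break_even_utilization(capex_usd: float, years: float,
                           opex_per_year_usd: float, usd_per_gpu_hour: float) -> float:
    """Utilization above which owning is cheaper than renting.
    A result above 1.0 means renting wins at any utilization for these prices."""
    owned = annual_owned_cost(capex_usd, years, opex_per_year_usd)
    return owned / (usd_per_gpu_hour * HOURS_PER_YEAR)


if __name__ == "__main__":
    capex, years, opex, rate = 30_000, 5, 4_000, 3.0  # hypothetical per-GPU inputs
    print(f"break-even utilization: {break_even_utilization(capex, years, opex, rate):.0%}")
    owned = annual_owned_cost(capex, years, opex)
    for u in (0.2, 0.5, 0.9):
        cloud = annual_cloud_cost(rate, u)
        cheaper = "owned" if owned < cloud else "cloud"
        print(f"utilization {u:.0%}: cloud ${cloud:,.0f} vs owned ${owned:,.0f} -> {cheaper}")
```

With these inputs, owning breaks even at about 38% utilization; a GPU kept busy by continuous training clears that bar easily, while one used for occasional bursts does not. The model omits reserved-instance and committed-use discounts, financing costs, and residual hardware value, any of which can shift the break-even point.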

For more context, see our deep dive on [[The Enduring Cycles of On-Premise vs. Cloud Compute]].

Further Reading

Original Source: Ship fast, optimize later: top AI engineers don’t care about cost — they’re prioritizing deployment (VentureBeat AI)